The Truth About Markets: Why Some Nations Are Rich but Most Remain Poor

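The Truth About Markets: The Logic Behind Rich and Poor Nations, a Reading of John Kay

On the world map of economic development we see a divide that is hard to explain: some countries are rich and prosperous and their people enjoy a high quality of life, while others remain mired in poverty, instability, and backwardness. Why is the world so unequal? What determines whether a country can become rich?

This is the question John Kay sets out to answer in The Truth About Markets: Why Some Nations are Rich but Most Remain Poor (Chinese edition: 《市场的真相:为什么有的国家富有,有的国家贫穷》). It is not an obscure economics textbook but a work of general economics for a broad audience, one that combines depth with insight.

I. Markets are not a universal formula but a complex mechanism

Kay's first core argument is that the market is not a "state of nature" but a highly institutionalized social mechanism.

Many "myths" about markets (that free markets produce optimal allocation, that private firms are necessarily efficient, that government intervention always fails) do not always hold in reality. Kay stresses that a successful market economy rests on law, trust, transparent institutions, competition, and social norms working together.

"The market economy is not a miracle of freedom but the result of institutional evolution."

This is why Western market logic cannot simply be copied to developing countries while ignoring the differences in the underlying institutional environment.

In a market, sellers know more about a product than buyers do. Social institutions such as trademarks, advertising, reputation, and regulatory bodies are what assure us of a hotel's comfort, the predictability of McDonald's, a doctor's competence, and a bank's solvency.

Some of these institutions arise from spontaneous individual action, and others are established by government. The two types often interact: a manufacturer's trademark is a spontaneous creation, but it would be meaningless without laws that forbid competitors from using the same mark.

II. The roots of differences between nations: institutions and path dependence

Why do some countries stay rich over the long run? Kay does not simply attribute it to natural resources or colonial history. He emphasizes "institutional quality" and "path dependence" instead.

He points out that rich countries tend to have:

- A stable, transparent rule of law
- Efficient administrative capacity
- Market structures that encourage innovation and competition
- Mechanisms of trust and social capital

By contrast, the predicament of poor countries often stems from:

- Corruption and rent-seeking by the powerful
- Weak enforcement of the law
- Excessive centralization or collusion between government and business
- Wholesale copying of "reform" programs designed elsewhere

Institutions are not a "recipe" that can be replicated in the short term. They are the result of long historical, cultural, and political evolution.

III. The market is a means, not an end

Kay stresses that **economic growth should not be treated as the ultimate goal; what matters is the improvement of human well-being.** He is wary of the "formalist tendency" in economics: using mathematical models to explain everything while ignoring the complexity of real societies.

"We pursue growth because it improves people's lives, not for the sake of GDP itself."

He criticizes "market above all" fundamentalism, and he equally rejects the view of government as the root of all evil. The real question is not market versus government but how to build a system in which incentives, governance, and culture are compatible, so that creativity and social cooperation can flourish.

IV. The real world needs "diversity and gradual reform"

Finally, Kay calls for breaking the superstition that there is a "single economic model". Each country, he argues, should explore a gradual path of institutional change suited to its own history, culture, and social structure, rather than blindly following slogans of globalization or liberalization.

"Development has no universal template, only continual trial and error and local wisdom."

Taking the successful experience of East Asian economies (such as Japan, South Korea, and Taiwan) as examples, he emphasizes that a hybrid model of "strong state plus effective market" often works better in practice than an idealized free market.

Conclusion: returning to common sense and understanding the nature of the economy

The Truth About Markets does not offer a startling new theory. It uses common sense, history, and reality to remind us that the success of markets depends on institutional design and cultural soil, and that wealth is not an accumulation of capital but an expression of the capacity for organization, trust, and cooperation.

The book suits anyone interested in economics, development, and social change, whether scholar, policymaker, or general reader. It leads us out of the ivory tower of economics and back to the real fabric of human society, where we can see the structural truth behind markets.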

The Almanack of Naval Ravikant: An Overview of Its Ideas and Classic Quotes

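About the author: Naval Ravikant is a well-known Silicon Valley entrepreneur, investor, and thinker, famous for his distinctive views on wealth, happiness, and personal growth. His ideas blend philosophy, business, and psychology, and they emphasize the accumulation of long-term value, inner happiness, and clear, efficient thinking.

The Almanack of Naval Ravikant: A Guide to Wealth and Happiness is usually translated into Chinese as 《纳瓦尔宝典:通往财富与幸福的指南》. Compiled and edited by Eric Jorgenson from Naval Ravikant's remarks, tweets, and interviews, the book systematically distills the essence of his thinking.

Classic Naval quotes

- Seek wealth and happiness, not money or status.
- Play long-term games with people who take the long view. Play iterated games, cultivate long-term relationships, and be someone who helps others.
- If you would not want to work with someone for life, do not work with them for even a day.
- Keep up the habit of reading. Study economics, game theory, psychology, mathematics, and computers. Avoid chasing the approval of any group, and place truth above it.
- If you cannot code, write books and blogs, or record videos and podcasts.
- Choose only what is important and right, then give it everything you have. Always consider the opportunity cost of your time.
- That said, who you work with and what you work on matter more than how hard you work. Escape competition through authenticity.
- Spend more time on the big decisions: where you live, who you are with, and what you do.
- Politics, academia, and social status are all zero-sum games. Positive-sum games are what create positive people.
- Zero-sum games breed schemers; positive-sum games breed people who play in the open.
- Happiness is a choice and a skill, and you can focus on learning that skill.
- The modern struggle: lone individuals summoning inhuman willpower to read, meditate, and exercise... against junk food, clickbait news, and addictive game mechanics.
- The ultimate freedom is the freedom to do nothing.
- Find your specific knowledge: knowledge that cannot be acquired through training.

If Naval's ideas interest you, his interviews, tweets, and podcasts can all give you a deeper understanding of wealth, happiness, and freedom.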

Brushing Past the Clamor of the World

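Opening

To the tune "Shao Nian You" (少年游)

A faint lamp lights the wall, sparse bells sound across the courtyard, the wind carries a few cicada calls.
Cool fragrance and drifting shadows, a greeting on rough paper; with an easy heart I fall asleep beside my book.
Amid the dust and din, the market's noise is like a sea; a moment's leisure is hard to find.
So I turn to a secluded study, half a window of breeze and moonlight, and sit alone to tune the strings of the heart.
I ask you: how is it that you let the heat wave pass quietly around you?
All things are in tumult; who knows that the cool, quiet places hold old poems of their own.
The tea is not yet cold, the mind still clear and far-reaching; I do not envy the smoke of the bustle.
I only wish that in my remaining years I may retire to hills and woods, untangled from worldly affairs.

I often think that if life is a journey, perhaps we need not always rush toward the busiest marketplace, and should allow ourselves to linger a while on the small paths by the roadside.

The clamor of the world always arrives in force. Social media and short videos surge in like a tide, competing for everyone's attention. Algorithms map human nature with precision and fill every corner of our fragmented time, seemingly leaving no moment to breathe. Yet on the far side of this noise, books wait quietly in the corner, unsought and seldom asked after. They have become cheap and accessible, yet they still carry profound ideas and weighty experience. At a moment when most people choose the noise, books have become an undervalued treasure.

This taught me that "brushing past" does not mean ignoring. It means consciously choosing to leave the hype and look for real value.

Development

Think again: are there other areas in which we have "brushed past the clamor" and gained more calm and clarity as a result?

I. Travel: skip the viral attractions and go where few people go

Travel today hardly seems to count unless you "check in" at a viral spot. The crowds surge, you queue to take a photo, and every angle has already been copied a thousand times. Yet what truly moves us is often not those bustling places but a remote village, a mountain path, or an early morning in a forest few people visit. Brushing past the noisy attractions earns us a quiet and genuine encounter.

II. Consumption: skip the "traffic bestsellers" and choose overlooked quality

In the age of e-commerce, hot-selling goods are often labeled "must-buy hits". But the more popular a product is, the more likely its quality has been compromised to please the crowd, with inflated prices and heavy homogenization. Low-key niche brands and handmade products, by contrast, often offer good value and consistent quality. Through one "brush past" after another in our buying decisions, we slowly learn to choose with care what truly suits us.

III. Work: do not fight over the hottest jobs; do work you are good at and can sustain

When AI engineer, product manager, and operations roles become the craze, many people scramble to get in without necessarily being a good fit or being happy. Neglected lines of work, such as independent research, creative work, education, and craft manufacturing, can nurture stability and depth at the margins. Brushing past the hot positions is not giving up on competition. It is choosing a pace and a path to value that suit you.

IV. Music: step away from the hits and embrace independent music

The mainstream charts are always full of hit songs played on repeat by large audiences. But the work of lesser-known independent musicians and underground bands often has more personality and depth. Brush past the mainstream, and what you gain is a purer artistic experience.

V. Social life: avoid over-socializing and choose deep companionship

With social networks so active today, feeds and group chats scroll endlessly, and relationships become shallow and cluttered. Rather than being drowned in endless "social noise", choose a few close friends for deep conversation. What you brush past is restlessness; what you gain is sincerity and support.

VI. Food: skip the fast-food chains and savor home-style cooking

Fast-paced life has produced all kinds of fast-food chains, standardized in taste but lacking warmth. Skip this widely consumed "noisy dining" and try an old shop on the corner, or cook for yourself, and feel the simplicity and wholesomeness of food.

VII. Reading: step away from trending news and follow classics and in-depth reporting

News is everywhere, and trending stories are stirred up again and again, leaving people tired and anxious. Stepping away from the bombardment and reading classic works or in-depth investigations lets thinking settle and yields more valuable information.

VIII. Shopping: skip the discount frenzies and spend rationally

During e-commerce shopping festivals, discounts are everywhere, and it is easy to fall into impulse buying. Brush past this kind of noise, calmly assess what you really need, and spend rationally, so that you do not bring home a pile of "must-haves" you never use.

IX. Exercise: skip the overcrowded gym and embrace the outdoors

Popular city gyms are often packed, noisy, and cramped. Running, cycling, hiking, and other outdoor activities draw fewer people, yet they bring us closer to nature and relax and refresh body and mind.

X. Personal finance: do not follow investment fads; plan steadily

"Hot" opportunities in stocks, cryptocurrencies, and all kinds of financial products appear without end, and investors are easily swayed by market noise into following the herd. Brushing past the hot products and adopting a steady, long-term plan is more conducive to stable growth in wealth.

Closing

Only after passing through many busy places do we learn that quiet ones have scenery of their own. Not all bustle is worth following, and not all stillness is useless. In an age pushed forward by the "attention economy", may we all have the courage to brush past the clamor of the world and discover the beauty and meaning that have been overlooked.

We need not reject the bustle, but we can choose not to be swallowed by it. The rarest clarity in life may be hidden in exactly these moments of "brushing past".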

Top 10 LLM and RAG labs

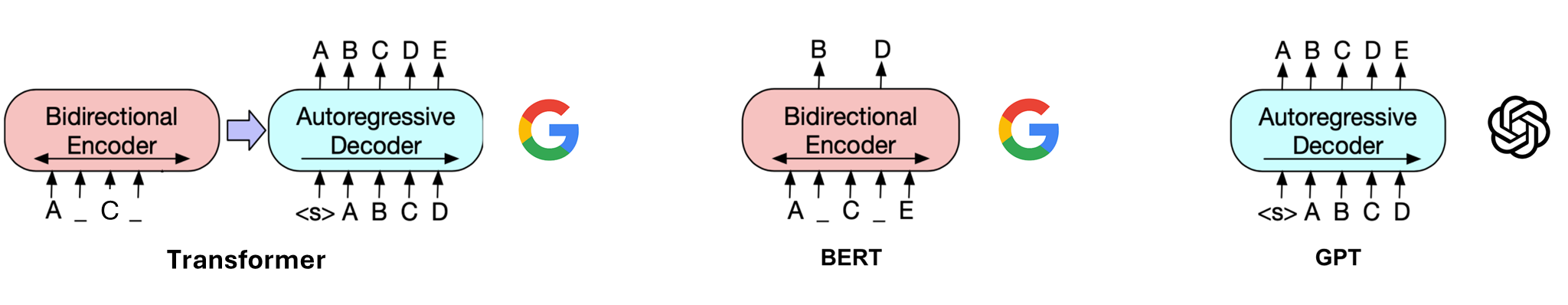

Here's a summary of some of the top AI labs working on Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG):

1. OpenAI
   - Focus: Pioneers in LLM development, notably the GPT series (e.g., GPT-4).
   - Key Contributions:
     - Developed GPT-3 and GPT-4, which are foundational models for many LLM applications.
     - Active work on aligning AI systems with human intentions (e.g., reinforcement learning from human feedback, RLHF).
     - Introduced advancements in prompt engineering and fine-tuning. OpenAI's models are often integrated with other tools, making them suitable for various tasks in industry and research.
   - RAG Efforts: OpenAI explores methods for combining generative models with external information retrieval (e.g., WebGPT, a research model that answers questions with the help of a text-based web browser).

2. Anthropic
   - Focus: AI safety, interpretability, and alignment in large-scale language models.
   - Key Contributions:
     - Development of Claude (Claude 1, 2, and 3), a family of conversational AI models.
     - Research into "Constitutional AI" for making AI systems safer and more aligned.
     - Focus on reducing AI's susceptibility to misuse and improving transparency.
   - RAG Efforts: Anthropic's work often explores integration of language models with search engines and structured knowledge for more effective and safe AI responses.

3. DeepMind (merged with Google Brain in 2023 to form Google DeepMind)
   - Focus: AI research across multiple domains, including reinforcement learning and generative models.
   - Key Contributions:
     - Development of Gopher, a large-scale language model, and Chinchilla, a model trained to demonstrate compute-optimal scaling.
     - Pioneering work in multi-modal AI, integrating vision, language, and reasoning.
   - RAG Efforts: DeepMind explores enhancing models with structured knowledge. RAG techniques that integrate external databases and dynamic knowledge retrieval are an active research direction.

4. Meta (Facebook AI Research - FAIR)
   - Focus: Large-scale machine learning models, including vision, language, and multi-modal research.
   - Key Contributions:
     - Development of LLaMA (Large Language Model Meta AI), focusing on creating efficient, open-access language models.
     - Exploration of dense retrieval and RAG models, especially in the context of open-domain question answering. FAIR researchers introduced both the original RAG model and Dense Passage Retrieval (DPR).
     - Work on improving the accessibility and efficiency of LLMs, along with techniques for using external knowledge to enhance model performance.
   - RAG Efforts: Meta's work on dense retrieval systems complements RAG, enabling models to access relevant information from external knowledge bases during generation.

5. Google DeepMind and Google Research
   - Focus: Advanced AI models, including transformers, multimodal models, and AI for healthcare and language understanding.
   - Key Contributions:
     - Development of the BERT and PaLM models, foundational advancements in transformer architectures and language modeling.
     - Development of T5 (Text-to-Text Transfer Transformer) and newer multimodal models like Gemini.
     - Introduction of efficient retrieval systems for large-scale language models.
   - RAG Efforts: Google has been a major player in integrating retrieval with generative models, from retrieval-augmented language model pre-training (REALM) to more recent work with models like Gemini.

6. Mistral AI
   - Focus: Developing open-weight, state-of-the-art language models with an emphasis on efficiency and scaling.
   - Key Contributions:
     - Introduction of the Mistral models, which are designed to be efficient and adaptable in specific use cases, like retrieval-augmented tasks.
     - Known for compact, efficient models such as Mistral 7B and the sparse mixture-of-experts Mixtral models.
   - RAG Efforts: Mistral is exploring models that are optimized for both generative tasks and those that require retrieval from external knowledge sources, aligning with the growing importance of RAG.

7. Cohere
   - Focus: Providing state-of-the-art LLMs for enterprise applications and improving efficiency in large-scale natural language understanding.
   - Key Contributions:
     - Cohere specializes in fine-tuning LLMs for specific tasks, such as summarization and classification, with a focus on multi-lingual models.
     - Their models are widely used for NLP tasks in the enterprise sector.
   - RAG Efforts: Cohere integrates knowledge retrieval systems to enhance the accuracy and relevance of its generative models, particularly in customer service and knowledge retrieval tasks.

8. EleutherAI
   - Focus: Open research in large language models and democratizing AI access.
   - Key Contributions:
     - Development of GPT-Neo, GPT-J, and GPT-NeoX, open-source alternatives to models like GPT-3.
     - A focus on scaling models and contributing to the open research community around large-scale transformers.
   - RAG Efforts: EleutherAI's open-source models often work in combination with external retrieval systems and are frequently used in open-domain QA tasks, integrating RAG principles.

9. Stanford and Berkeley AI Research Labs
   - Focus: Advanced research in AI, deep learning, and natural language processing.
   - Key Contributions:
     - Stanford's work includes the Stanford NLP Group's widely used toolkits (such as CoreNLP and Stanza) and research on foundation models. BERT, sometimes credited to Stanford, was developed at Google.
     - Berkeley's AI research focuses on integrating reasoning, multimodal models, and the use of external knowledge bases for model enhancement.
   - RAG Efforts: These labs are heavily involved in research to improve retrieval-augmented generation, such as through dense retrieval models and other information retrieval methods to improve LLM performance.

10. University of Washington (UW)
    - Focus: AI and machine learning with an emphasis on text understanding, vision-language models, and large-scale learning.
    - Key Contributions: Known for advancements in transformers, large-scale data usage, and model interpretability.
    - RAG Efforts: UW's research in retrieval and large-scale language models focuses on improving efficiency and integration with external sources to augment generated responses.

Conclusion

These labs represent a diverse range of approaches to advancing LLMs and RAG, from foundational model development and AI safety to integration with external information retrieval systems. RAG is becoming an increasingly important part of LLM research because it grounds a model's output in retrieved information that is more accurate and contextually relevant. The integration of search engines, structured knowledge bases, and other retrieval mechanisms helps AI models respond in a more informed and nuanced way. ...
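To make the retrieval step described above concrete, here is a minimal sketch of the retrieve-then-prompt pattern behind RAG. It is an illustration under loud assumptions, not how any of the labs above implement it: the `DOCUMENTS` list, the function names, and the prompt wording are all hypothetical, and a simple bag-of-words cosine similarity stands in for the learned dense retrievers used in practice. The final generation call is omitted; the sketch stops at the prompt a generator would receive.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base; the passages are illustrative only.
DOCUMENTS = [
    "Retrieval-augmented generation pairs a retriever with a text generator.",
    "Dense retrieval encodes queries and passages as vectors.",
    "Hugo is a static site generator written in Go.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents that share vocabulary with the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents with no overlap at all so the prompt is not padded with noise.
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Assemble the retrieved passages and the question into one prompt string."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "What does retrieval-augmented generation pair together?"
    print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

Swapping `retrieve` for a vector-database lookup or a search-engine call leaves `build_prompt` unchanged, which is the main reason the two stages are kept separate in this sketch.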

Hugo + papermod

Hugo + papermod learning materials:

- https://kyxie.github.io/zh/blog/tech/papermod/
- https://www.sulvblog.cn/posts/blog/hugo_toc_side/
- https://dvel.me/posts/hugo-papermod-config/
- https://docs.hugoblox.com/reference/markdown/

this is heading two